Susanne De Mooj, a PhD student at the Centre for Brain and Cognitive Development, tells us about her work in collaboration with the Dutch educational company Oefenweb, which draws on data from over 300,000 individuals using the company’s maths and language e-learning apps. Online learning environments can continuously adapt to differences between learners and to changes within individual learners over time. Susanne is investigating innovative ways these apps may be tailored to enhance different aspects of the online learning experience.

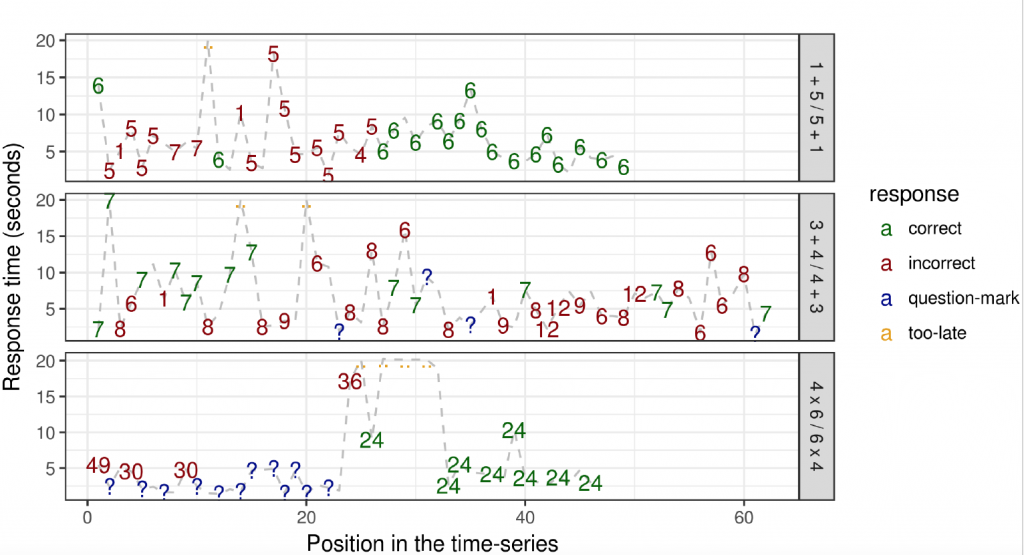

Learning is an enormously complex system in which many elements interact with each other: general mental ability, prior knowledge, learning styles, personality characteristics, motivation, anxiety, and many more. Over time, these interactions produce extensive individual differences in cognitive trajectories, making it difficult for education and research to optimise learning for everyone. Online learning environments can have a positive impact on education, and on individual students, by providing individualised computer adaptive practice and monitoring tools. Tracking how both accuracy and response time develop in an individual can shed new light on the complexity of learning. This is illustrated below by three individual time series of children practising single mathematical problems (e.g. 1 + 5) over a long period (adapted from Brinkhuis et al., 2018). In the upper and lower panels of this figure you see a three-stage pattern, moving from mainly incorrect responses to fast correct responses. The middle panel, on the other hand, shows a child who does not learn 3 + 4 despite practising this item 61 times, with correct responses alternating with errors. The highly variable patterns within and between learners show that tailoring and monitoring their learning experience is essential.

Cognitive profile and time perception. Any learning environment (offline or online) creates a certain cognitive load, particularly on attention and working memory. This load can be increased by irrelevant objects or information (e.g. gamified sounds, flashing objects, alternative answer options), but also by other sources such as worried thoughts about performance. These stressors can either drive children to use more efficient strategies or compete with the attention needed to learn new skills. One particular stressor used in many game-based learning platforms and experiments is time pressure. In one of our smaller-sample studies we found that showing the time pressure on the screen has an impact on maths performance. Critically, we found that an individual’s ability to inhibit irrelevant information is key to whether this affects the learning experience. Eye movement patterns in this study also showed that whether time pressure is visible in the learning environment, in combination with the children’s cognitive profile, affects where and how much they attend. Although bigger studies are needed, time perception and individual cognitive profile are features that we might need to consider in adaptive frameworks.

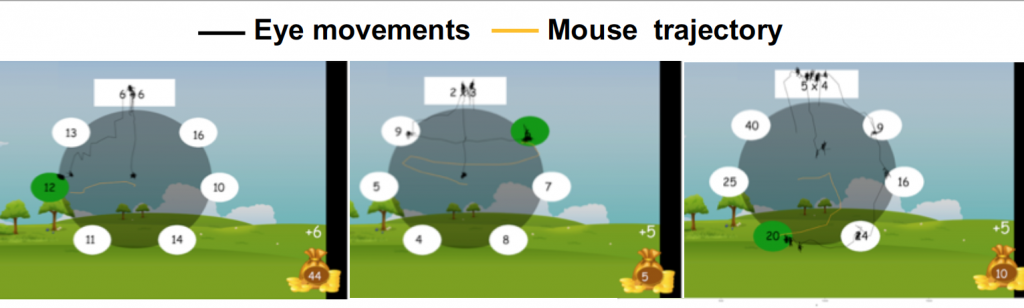

Mouse tracking. In most educational tools, the most widely used indicators of learning are response time and accuracy, as shown in the individual time series figure. Although these measurements are well suited to indicating overall performance, they cannot be used as continuous measures of the underlying cognitive process. A promising online measure designed to track the timing of evolving mental processes is mouse tracking (Freeman, Dale, & Farmer, 2011; Song & Nakayama, 2009; Spivey, Grosjean, & Knoblich, 2005). In this paradigm, the speed and movement of the mouse pointer, as well as where it is placed on the screen, are tracked to see how much attention the user pays to certain stimuli. Mouse tracking is becoming popular on commercial websites as a way to gain insight into behaviour. It is also available and potentially useful for researchers; see, for example, the recent implementation in the online experiment builder Gorilla. One of our current studies tracks the mouse movements of 100,000 active users for a month while they practise arithmetic skills. Specifically, we are interested in the attraction towards alternative answer options that are not selected as the response, to get a better understanding of the user’s possible misconceptions. The aim of this online, technology-based assessment of misconceptions is to adapt feedback and instruction on an individual basis.
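To make the idea of attraction towards an unselected answer option more concrete, here is a minimal sketch of two trajectory measures that are common in the mouse-tracking literature. It is an illustration only, not the analysis used in the study: the function names, the `(x, y)` pixel format of the trajectory and the position of the alternative option are all assumptions.

```python
import math


def max_deviation(trajectory):
    """Largest perpendicular distance of any cursor sample from the straight
    line joining the first and last samples (hypothetical helper).

    `trajectory` is assumed to be a list of (x, y) cursor positions.
    """
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    if length == 0:
        return 0.0  # the cursor ended where it started; no line to deviate from
    return max(
        abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / length
        for x, y in trajectory
    )


def closest_approach(trajectory, option_xy):
    """Smallest distance between the cursor path and an answer option that
    was not selected (hypothetical helper)."""
    ox, oy = option_xy
    return min(math.hypot(x - ox, y - oy) for x, y in trajectory)


# Made-up trajectory that bends towards an alternative option at (4, 4)
# before ending on the chosen option at (0, 10).
path = [(0, 0), (3, 4), (0, 10)]
print(max_deviation(path))             # 3.0
print(closest_approach(path, (4, 4)))  # 1.0
```

A larger deviation combined with a small closest approach to one particular alternative would suggest the learner was drawn to that option before settling on their answer.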

Large-scale measure of (dis)engagement.

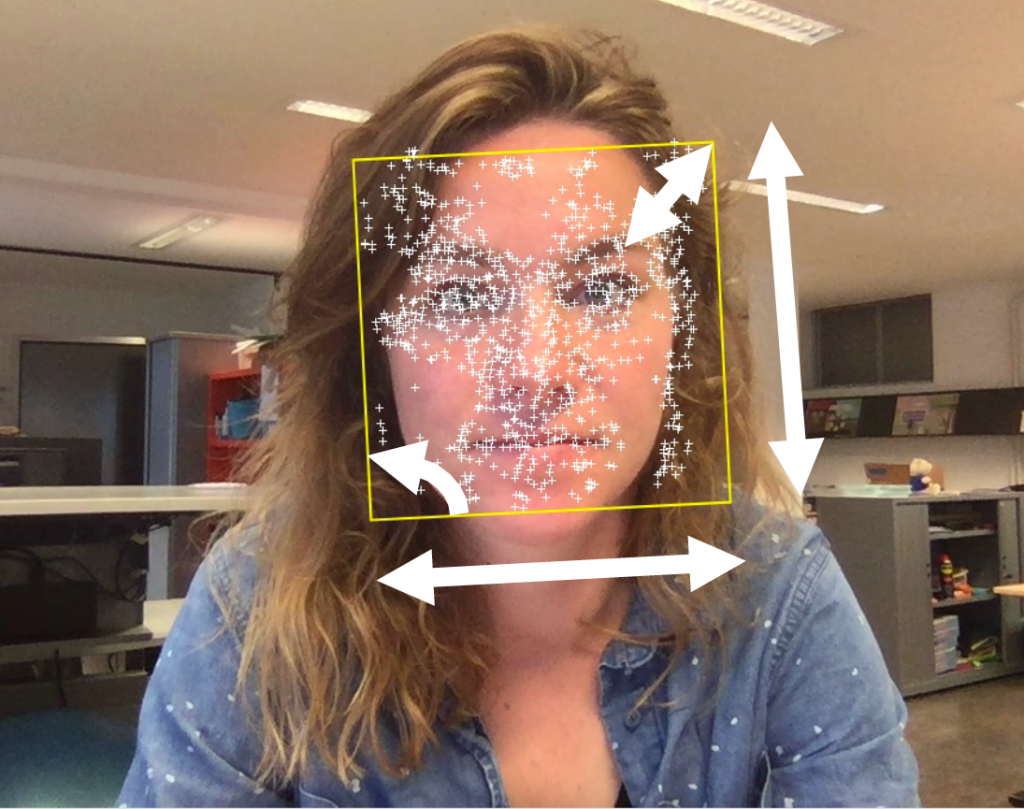

The way a student engages in learning is essential to their experience. Engagement is mostly defined as attentional and emotional involvement with a task (Christenson, Reschly, & Wylie, 2012). However, engagement is not stable but fluctuates throughout the learning experience. Different measures are used to assess both initial and sustained engagement, such as self-report questionnaires, heart rate changes, pupil dilation and emotion detection. For large-scale online detection, head movement has been proposed as an estimate of the dynamics of the user’s attentional state. Generally, studies find that head size, head posture and head position successfully capture engagement: a person who is deeply engaged moves less, while a person who is distracted or bored moves their head more. To measure engagement within an online learning platform or in typical psychological experiments, we use an automated detection algorithm that tracks (multiple) faces with a simple webcam, either during the learning experience or afterwards from videos. Our current study (N=83 children, 8-12 years old) investigates how head movement relates to both emotional and cognitive engagement, and whether we can predict if children are in the ‘flow’ or are about to disengage from the task. Hopefully, this will enable us to prevent high dropout rates and adapt the presentation and content to the individual learner’s state.
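As a rough illustration of how head movement could be turned into a number, the sketch below averages the frame-to-frame displacement of a detected face and scales it by face width, so that sitting closer to the webcam does not inflate the score. This is not the detection algorithm used in the study. The face detector itself is not shown, and the inputs (`centres` and `face_widths`, one entry per video frame, in pixels) are hypothetical.

```python
import math


def head_movement(centres, face_widths):
    """Mean frame-to-frame displacement of the face centre, in face widths.

    `centres` is assumed to be a list of (x, y) face centres, one per frame;
    `face_widths` holds the detected face width for the same frames and is
    assumed to be positive. Both would come from a face detector (not shown).
    """
    if len(centres) < 2:
        return 0.0  # movement needs at least two frames
    steps = [
        math.hypot(x2 - x1, y2 - y1) / width
        for (x1, y1), (x2, y2), width in zip(centres, centres[1:], face_widths[1:])
    ]
    return sum(steps) / len(steps)


# Made-up example: the head shifts by 5 pixels once, then stays still.
print(head_movement([(0, 0), (3, 4), (3, 4)], [10, 10, 10]))  # 0.25
```

Under the pattern described above, lower values would go with deeper engagement and higher values with distraction or boredom, although any threshold would have to be validated against other engagement measures.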
